Militant Groups Experimenting With AI; Global Security Risks Projected to Escalate

- by Aman Prajapat

- 16 December, 2025

Introduction: A New Front in Global Security

As the digital revolution reaches into every corner of human endeavor, artificial intelligence, a powerful tool for innovation, is increasingly being weaponized by bad actors around the globe. National security experts and intelligence officials have recently documented a growing trend: militant and extremist groups are not merely observing the rise of AI; they are experimenting with it.

This development marks more than a technological curiosity. It represents a potential paradigm shift in how nonstate actors project influence, recruit followers, wage information warfare, and possibly even conduct cyberattacks or biological assaults. The threat is not hypothetical; it’s already emerging, and the risks are expected to grow.

The Groups and Their Technological Trajectories

Among those experimenting with artificial intelligence are extremist organizations rooted in violent ideologies, most notably affiliates of the Islamic State (IS) and similar groups that now operate as loose, decentralized networks. Many of these groups, once territorial insurgencies, pivoted early to digital platforms for messaging and recruitment. Today, with AI tools more widely accessible than ever, that digital pivot is deepening.

A user on a pro-IS forum recently urged fellow supporters to integrate AI into their online operations, citing not a complex strategy but how easy modern AI tools are to use, a sign of how accessible these tools have become even for resource-limited actors.

Generative AI programs such as large language models and deepfake generators have become force multipliers. With them, a small group, or even a single individual with an internet connection, can generate propaganda at scale, refine recruitment messaging, and produce disinformation tailored to different audiences.

Recruitment and Radicalization: AI’s Role in Online Messaging

One of the most immediate uses of AI by militant groups is in crafting propaganda and recruitment materials. Unlike earlier methods, which relied primarily on human propagandists and basic video editing tools, AI can produce content that feels more “authentic” and polished. Generative AI enables:

Large-scale production of text and visuals tailored to specific demographics.

Deepfake images and videos that mimic real events or portray fabricated scenes.

Audio generated to sound like known figures or voices, sometimes in multiple languages.

Experts believe that AI-generated content can amplify existing narratives, making them more persuasive to susceptible audiences and harder for typical content filters to detect.

Militant groups have already experimented with AI-generated visuals during the Israel-Hamas conflict, circulating manipulated images of children and civilians in distress that fueled outrage and deepened polarization.

Deepfakes and Disinformation: A Growing Tool for Chaos

Deepfakes—synthetic media that replaces or alters a person’s likeness—represent one of the most troubling aspects of AI misuse. Extremist organizations can use deepfakes to:

Mimic leaders or spokespeople issuing commands or messages that attract recruits.

Fabricate scenes of violence or conquest to exaggerate perceived success.

Spread misinformation after real events to distort public perception or spur fear.

Deepfake technology once required significant technical expertise. Today, it is accessible through user-friendly AI platforms that require little more than a few clicks. This democratization of deepfake creation significantly lowers the barrier for extremist use.

When amplified by social media algorithms—which often prioritize engagement over truth—such content can spread quickly, shaping narratives and inflaming tensions far beyond the origin point.

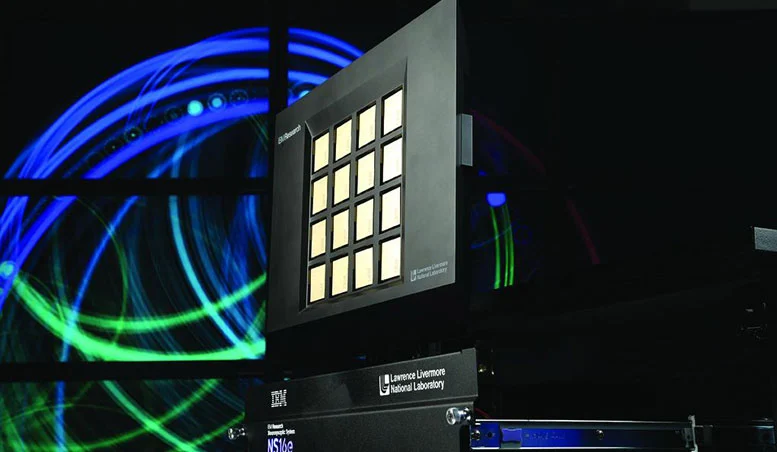

Cyberattacks and Automated Warfare

Beyond propaganda, AI’s impact on cyber warfare is also a growing concern. As tools become more sophisticated, extremist groups could harness AI to enhance cyberattacks by:

Automating phases of a cyber intrusion.

Scanning for vulnerabilities in networks or critical infrastructure.

Generating phishing campaigns that mimic trusted figures or institutions.

Intelligence experts acknowledge that while militant groups still lag behind state actors like China, Russia, or Iran in technical sophistication, the rapid evolution of AI tools could narrow this gap, especially because even basic AI combined with social engineering can produce outsized effects.

Biological and Chemical Threats: A Far-Reaching Danger

Perhaps most alarmingly, some analysts worry about the biological and chemical weaponization potential of AI. While these uses remain speculative and aspirational, they are not dismissed out of hand. As AI models become capable of assisting in complex problem solving, there’s fear that extremist groups could use them to design or simulate toxic agents or delivery systems—possibly overcoming technical knowledge barriers they would have faced in the past.

Government threat assessments, including those by homeland security agencies, have flagged this possibility as part of a broader AI risk landscape.

Policy Responses and Security Debates

In response to these expanding dangers, lawmakers and security officials are debating new regulatory frameworks. In the United States, for example, proposed legislation would require annual risk assessments by homeland security officials to monitor how extremist groups might be leveraging AI.

Officials have also called for improved information sharing between AI developers and law enforcement or intelligence agencies to better understand and mitigate misuse. This could include data on malicious queries or patterns of abuse of openly accessible tools.

Some researchers argue that industry self-regulation alone is insufficient, and that proactive government oversight and international cooperation are needed to keep pace with technological misuse.

The Future: Evolving Threats and Enduring Challenges

As AI tools become more powerful, affordable, and ubiquitous, the potential for misuse grows correspondingly. The same innovation that helps doctors diagnose disease faster and designers create art now also provides extremist organizations with potent new weapons in the information battlefield.

Security analysts caution that the story of AI and militancy is still in its early chapters. Just as email and social media were once new and unregulated, AI will continue to outpace existing institutional safeguards unless policymakers, technologists, and civil society act decisively.

In the years ahead, balancing the vast benefits of artificial intelligence with the grave risks posed by its misuse will be one of the defining security challenges of the digital age.

Note: Content and images are for informational use only. For any concerns, contact us at info@rajasthaninews.com.
